Get your job done faster

We’re changing the way performance is delivered

Many storage systems can deliver high throughput, but they cannot scale across thousands of parallel streams at sub-millisecond latency.

A XingFS node runs several software components. The proprietary local device manager and filer, built on metadata and data disks, provides a local namespace and a block I/O service. The global volume manager aggregates all the local namespaces into a cluster-level filesystem. The cluster manager maintains the nodes to improve the availability and robustness of the whole cluster. The NAS protocol services pass each request directly to the global volume’s APIs to improve performance. CLIs and GUI portals let users maintain the cluster and filesystem. The relationship between these components is shown in the figure below.


WHY XingFS

Cost-effectiveness

At the same bandwidth, XingFS requires only half as many hard disks as other systems, which greatly reduces the total cost.

Nodes and Network

XingFS features a symmetrical, shared-nothing architecture whose core is a distributed filesystem and clustered NAS. All the storage servers in a XingFS cluster have the same hardware configuration. A storage server in the cluster is hereinafter called a node. A XingFS cluster consists of 3 to 255 nodes and scales by adding equivalent nodes to gain capacity and performance.


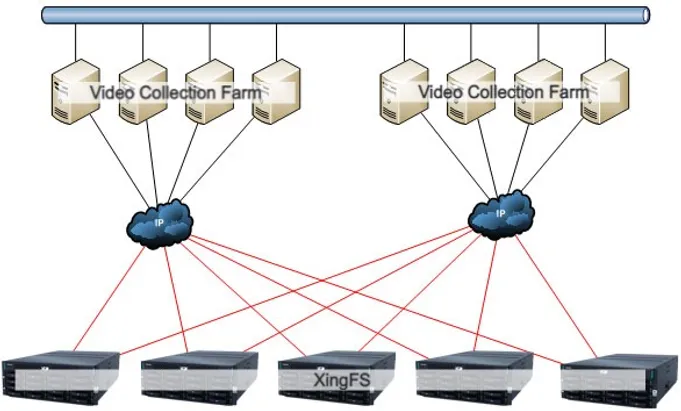

A XingFS cluster is depicted in the accompanying diagram. The nodes in a XingFS cluster are connected by the backend network, which carries data, metadata and cluster commands. It is based on Ethernet and generally uses two switches to improve robustness. By default, the addresses on the backend Ethernet ports come from a self-managed IPv6 pool. Enabling RDMA on the backend network, if the network supports it, can greatly improve performance.

Case Study

Which industries are using XingFS?

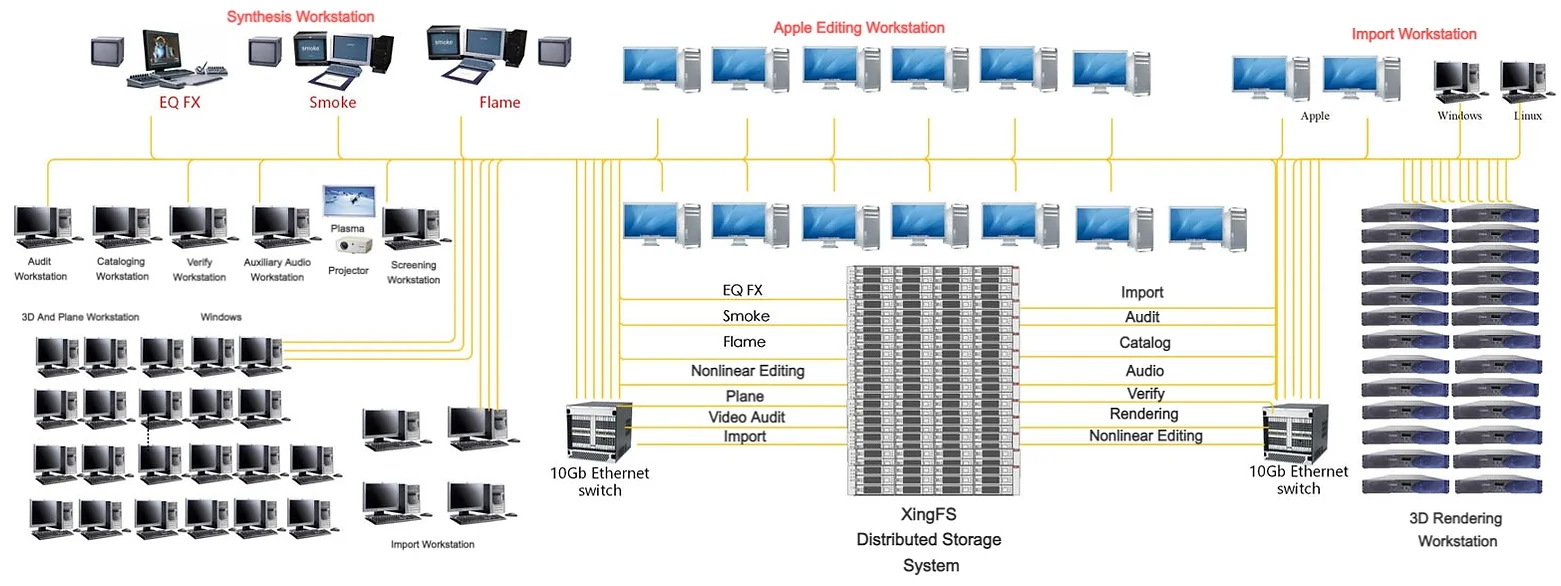

Video Content Audit

Video Surveillance Item

Multiple application-server clients record video around the clock, all year round, and the retention period is relatively long. The storage must offer sufficient capacity, easy expansion, relatively high bandwidth, and support for many concurrent reads and writes.

Nonlinear Editing

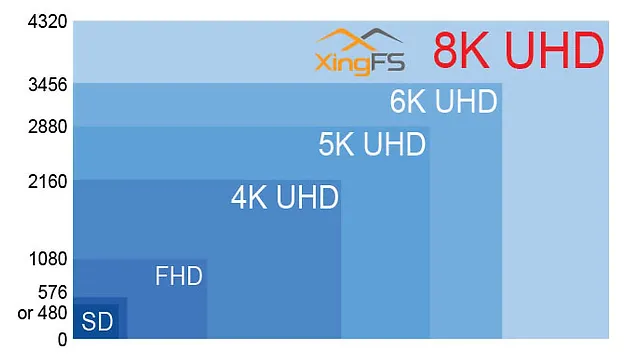

A non-linear editing system requires very high, sustained and stable bandwidth from the storage system. As broadcasting requirements move from high definition (1080) to ultra-high definition (8K), the demand for stable bandwidth keeps increasing.
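To give a rough sense of how the bandwidth requirement grows from 1080 to 8K, the sketch below computes the raw (uncompressed) bit rate of a single video stream. The frame sizes are the standard 1920x1080 and 7680x4320; the frame rate and bits per pixel are illustrative assumptions, not XingFS figures, and real editing workflows usually use compressed codecs with lower rates.

```python
# Raw bit rate of one uncompressed video stream.
# Assumed values (not from XingFS): 25 frames/s and 20 bits per pixel
# (roughly 10-bit 4:2:2 sampling).

FRAME_SIZES = {
    "1080": (1920, 1080),   # Full HD
    "8K": (7680, 4320),     # 8K UHD
}


def raw_bits_per_second(width: int, height: int,
                        fps: int = 25, bits_per_pixel: int = 20) -> int:
    """Return the uncompressed bit rate of one stream in bits per second."""
    return width * height * fps * bits_per_pixel


if __name__ == "__main__":
    for name, (width, height) in FRAME_SIZES.items():
        gbps = raw_bits_per_second(width, height) / 1e9
        print(f"{name}: {gbps:.2f} Gbit/s per stream")
```

Because an 8K frame has 16 times as many pixels as a 1080 frame, the per-stream rate grows by the same factor under these assumptions, and it multiplies again with the number of concurrent editing streams.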

Product Advantages:

- Strong Concurrent Access Capability

- Low Access Latency

- High Aggregate Bandwidth

- Strong Bandwidth Stability

- High Scalability

Features & benefits

The XingFS distributed storage system supports high-speed networks such as 10GbE, 25GbE, 40GbE, 100GbE and InfiniBand. It uses distributed hashing algorithms to locate data in storage pools instead of centralized or distributed metadata server indexes. Each storage system can locate the access path of any data by itself, which removes the dependence of distributed storage on metadata servers, eliminates single points of failure and performance bottlenecks, and enables parallel data access and linear performance growth.
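The idea of locating data by hashing rather than by a metadata index can be illustrated with a minimal sketch. The source does not say which hashing algorithm XingFS uses; the example below uses rendezvous (highest-random-weight) hashing as a stand-in, and the node names, the `locate` function and the `replicas` parameter are all hypothetical.

```python
import hashlib


def _score(node: str, key: str) -> int:
    """Deterministic pseudo-random weight for a (node, key) pair."""
    digest = hashlib.sha256(f"{node}|{key}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big")


def locate(key: str, nodes: list[str], replicas: int = 1) -> list[str]:
    """Return the nodes that hold `key`, computed without any index lookup.

    Every client that knows the node list gets the same answer, so no
    metadata server has to be asked where the data lives.
    """
    if not 1 <= replicas <= len(nodes):
        raise ValueError("replicas must be between 1 and the number of nodes")
    ranked = sorted(nodes, key=lambda node: _score(node, key), reverse=True)
    return ranked[:replicas]


if __name__ == "__main__":
    cluster = [f"node-{i}" for i in range(1, 5)]  # hypothetical node names
    print(locate("/videos/cam01/0001.mp4", cluster, replicas=2))
```

With this scheme, adding a node only moves the keys for which the new node scores highest; every other key stays where it was, which is one reason hash-based placement suits online expansion.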

The capacity of the XingFS distributed storage system can be expanded to hundreds of PB. Expansion is performed online, without interrupting running business, and the entire process can be completed within a few minutes. The system expands horizontally by adding new nodes and gains higher aggregate performance and larger capacity immediately after expansion. Users can purchase configurations on demand; as the business grows, they only need to purchase more nodes, which greatly reduces purchase and operating costs.


The XingFS distributed file system processes file data, applies multiple checks to it, and stores it on multiple hard disks across multiple nodes, so that the data can always be accessed, even in the event of hardware failure. Self-healing restores data to its correct state, and repairs are performed incrementally in the background with little to no performance load.
